OpenAI has launched two new artificial intelligence models with enhanced reasoning capabilities: gpt-oss-120b and gpt-oss-20b. They are the company's first open-weight releases since GPT-2, which came out more than five years ago. Both models are available for free on Hugging Face, so developers and researchers can download them and build their own solutions.

The models differ in terms of performance and hardware requirements:

- gpt-oss-120b: the larger, more capable model, which can run on a single 80 GB NVIDIA GPU;

- gpt-oss-20b: a lightweight version that can run on a standard laptop with 16 GB of RAM.
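
A quick back-of-the-envelope calculation shows why these hardware targets are plausible. The sketch below estimates memory for the weights alone as parameters × bits per parameter ÷ 8. The inputs are assumptions, not figures from this article: roughly 21 billion and 117 billion total parameters, and about 4.25 bits per parameter, as in a 4-bit MX-style format with one shared 8-bit scale per 32-value block. It ignores activations, the KV cache, and any layers kept at higher precision, so treat the results as rough lower bounds.

```python
def weight_memory_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate memory for model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Assumed total parameter counts (approximate, for illustration only).
MODELS = {"gpt-oss-20b": 21e9, "gpt-oss-120b": 117e9}

# Assumed ~4.25 bits/param: 4-bit values plus an 8-bit scale shared by 32 values.
QUANTIZED_BITS = 4 + 8 / 32

for name, params in MODELS.items():
    quantized = weight_memory_gb(params, QUANTIZED_BITS)
    bf16 = weight_memory_gb(params, 16)
    print(f"{name}: ~{quantized:.1f} GB at {QUANTIZED_BITS} bits, ~{bf16:.1f} GB at 16 bits")
```

Under these assumptions the smaller model's weights come to roughly 11 GB, which leaves headroom on a 16 GB machine, and the larger one to roughly 62 GB, under the 80 GB of a single high-end data-center GPU. At 16 bits per parameter neither would fit on those targets.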

OpenAI positions the release as an American open-model alternative to the growing influence of Chinese labs such as DeepSeek and Alibaba's Qwen team.

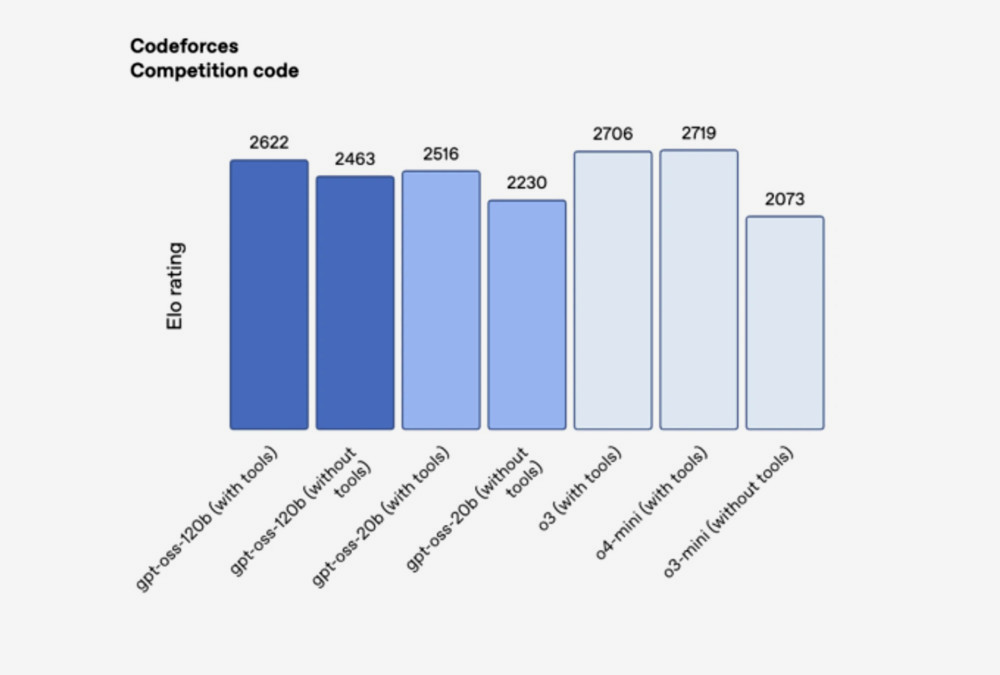

On the Codeforces competitive programming benchmark, gpt-oss-120b reached a rating of 2622 and gpt-oss-20b reached 2516, outperforming DeepSeek R1 but falling short of closed models such as o3 and o4-mini. On the challenging Humanity's Last Exam (HLE), gpt-oss-120b scored 19% and gpt-oss-20b 17.3%, better than other open models but still below o3.

The new models were trained with methods similar to those used for OpenAI's closed models. They use a mixture-of-experts (MoE) architecture, which activates only a subset of the parameters for each token to improve efficiency. Additional reinforcement learning (RL) fine-tuning enables them to produce step-by-step reasoning chains and to call tools such as web search or Python code execution.
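
To make "activating only a subset of the parameters" concrete, here is a minimal sketch of generic top-k MoE routing for a single token. It is not OpenAI's implementation: the router weights, the toy experts, and the choice of k = 2 out of 4 experts are all hypothetical. A router scores every expert, only the k highest-scoring experts actually run, and their outputs are mixed with softmax weights computed over the chosen experts.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Vector) -> Vector:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(token: Vector, router: List[Vector],
                experts: List[Expert], k: int) -> Tuple[Vector, List[int]]:
    """Route one token through the top-k experts and mix their outputs."""
    # Router logit for each expert: dot product of its router row with the token.
    logits = [sum(w * x for w, x in zip(row, token)) for row in router]
    # Keep only the k highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Normalize the gate weights over the chosen experts only.
    gates = softmax([logits[i] for i in chosen])
    out = [0.0] * len(token)
    for gate, i in zip(gates, chosen):
        y = experts[i](token)
        out = [o + gate * v for o, v in zip(out, y)]
    return out, chosen


# Hypothetical toy setup: 4 experts; expert i scales its input by (i + 1)
# and records how many times it was called.
calls = [0, 0, 0, 0]


def make_expert(i: int) -> Expert:
    def expert(x: Vector) -> Vector:
        calls[i] += 1
        return [(i + 1) * v for v in x]
    return expert


experts = [make_expert(i) for i in range(4)]
router = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
out, chosen = moe_forward([2.0, 1.0], router, experts, k=2)
print(chosen, calls)  # [0, 1] [1, 1, 0, 0]
```

The call counter shows the efficiency argument: all four experts hold parameters, but only the two selected for this token do any work. In a real MoE layer the experts are feed-forward networks and the router is a learned linear layer, but the selection logic follows the same pattern.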

The models are text-only and do not generate images or audio. They are released under the Apache 2.0 license, which permits commercial use without OpenAI's approval, though the training data remains closed due to copyright risks.

With gpt-oss, OpenAI aims to strengthen its standing in the developer community and to respond to political pressure in the US to expand the role of American open models in global competition.